Learning to produce with AI, not just consume it

We've been deep in the trenches working to transition our existing client base to an AI-native way of working, and we are ready to start sharing our learnings heading into 2026.

Here's our first takeaway:

If everyone has a calculator, everyone should be able to do basic math.

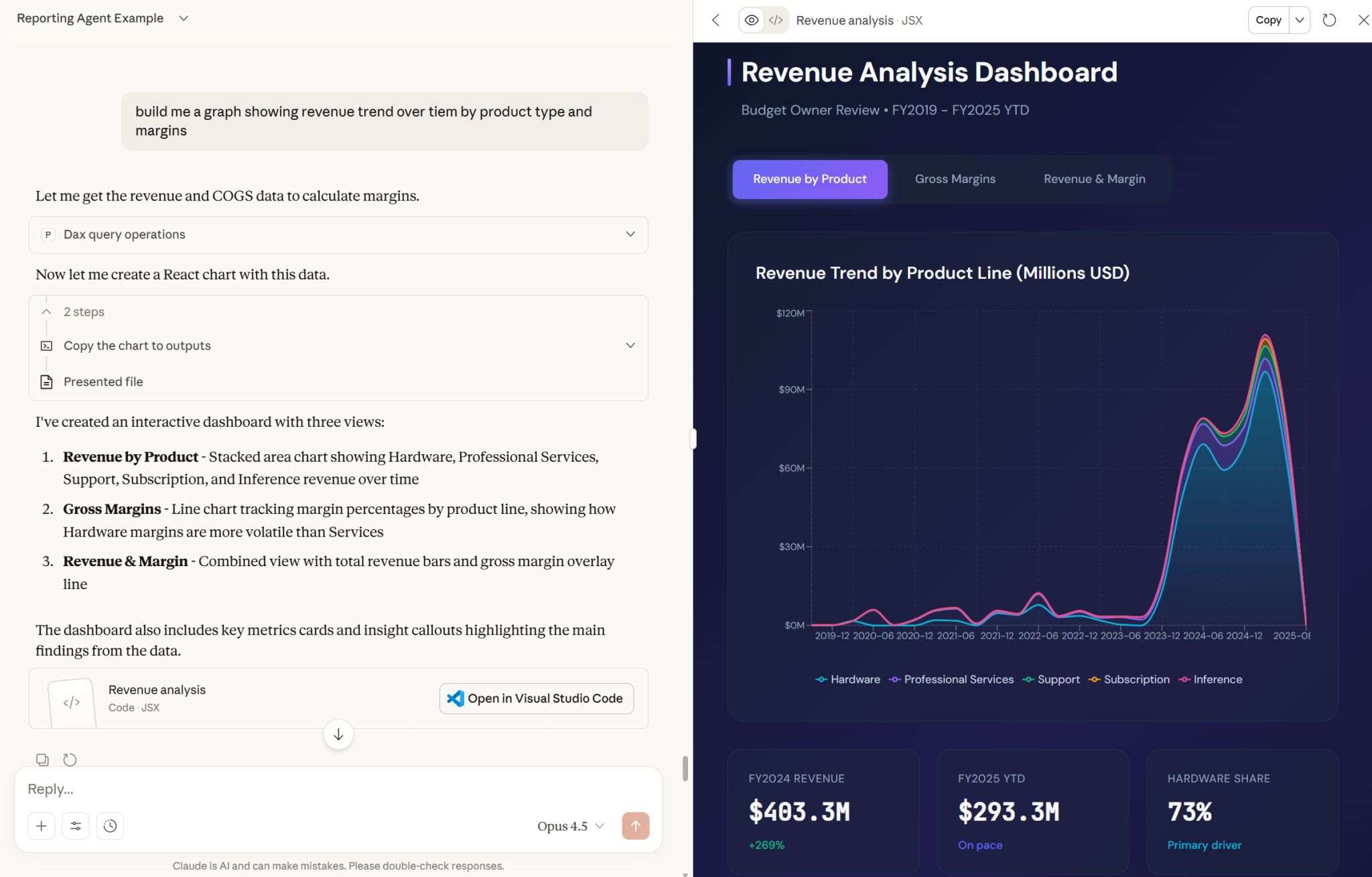

LLMs can do many things, and one of the things they do very well is write code. They can write code the way calculators do math.

The problem is that despite these advancements, the vast majority of folks still look at coding as another world, accessible only to engineers. Few realize the power of the calculators in their hands.

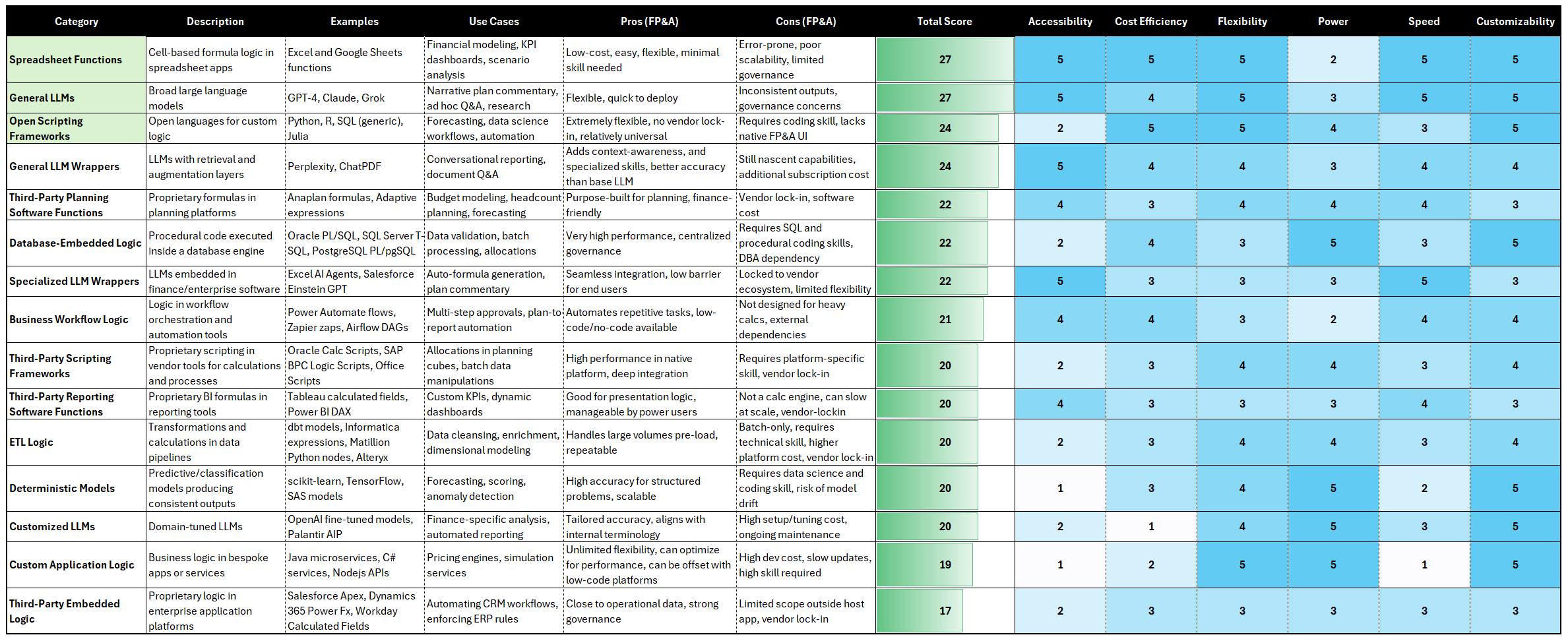

We see many folks paying consultants or buying expensive software to push the buttons for them. We also see a lot of folks using extremely expensive calculators to solve problems where the free built-in calculator on their phone is more than enough.

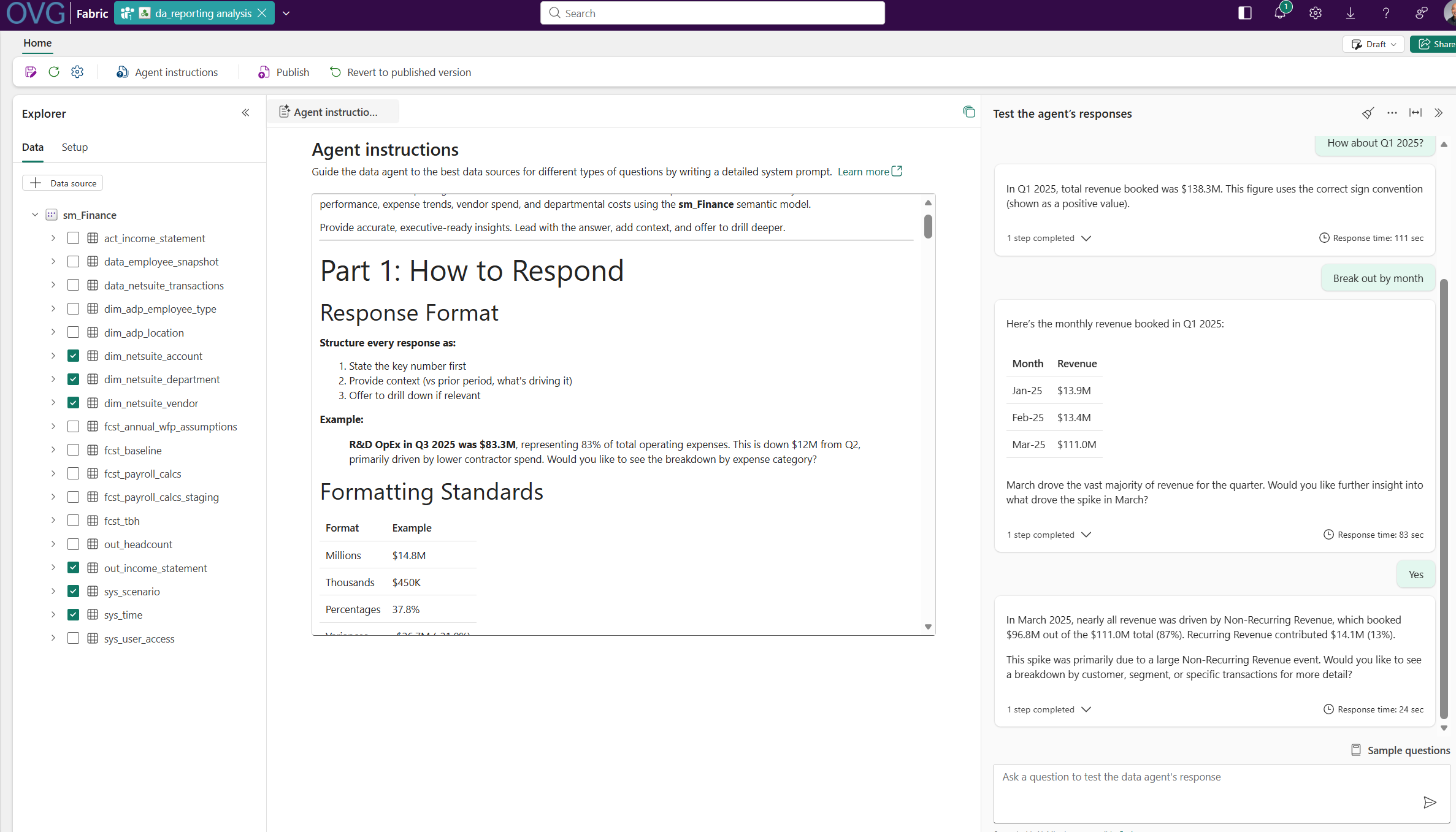

I was part of this group. I never succeeded in building anything with full-code, but with the help of LLMs, I can now build apps, models, and data architecture in ways I could only have dreamt of before.

As a team, we have found that using the basic calculators to deepen our understanding of full-code, product-building and design, and systems-thinking delivers 10x more value than the fanciest calculators.

The main barrier to AI value realization, at least for Finance teams, is not more electricity, tokens, chips, or apps. It is the ability to expand beyond the restrictions of the cloud SaaS era, in which teams are always subject to the limitations of a third-party UI.

Apps will continue to provide value, but the baseline of what is possible needs to be entirely reset, and more power needs to shift from software providers to individuals.

Our objective at OVG is to help teams cross this chasm, establishing this new baseline so our clients can take advantage of the AI revolution to the fullest.